Part 1: Current Practices for Security Program Management

Demetrios Lazarikos (Laz)

June 2, 2022

This is the first of three blog posts about our recent survey on security program management (SPM). This post discusses some of the survey findings about current practices. The next two will cover priorities for improving SPM, and our conclusions and recommendations.

The full report is available here: Security Program Management: Priorities and Strategies.

Peer Data on Security Program Management

Security program management has been recognized as an essential discipline for security leaders since at least the early 2000s, but there is surprisingly little data available to answer questions such as:

- How often do organizations assess the effectiveness and maturity of their security program, and what frameworks and standards do they use to help?

- How often do security leaders meet with their executives and boards of directors to communicate priorities and investment needs?

- What best practices have security leaders developed for managing their programs, and what kind of enhancements would help them do it better?

To answer those questions and more, Blue Lava and AimPoint Group commissioned a survey of 268 CISOs, CIOs, and senior security and risk managers. In this post we look at their responses concerning current practices.

How Often Do Organizations Assess Their Programs?

Ongoing visibility into the maturity and effectiveness of a security program enables security leaders to continuously improve performance. It also helps them stay up to date on changing conditions, such as emerging threats and new technology initiatives. So how often do they perform assessments?

The data shows a mixed picture. About 19% of organizations perform an assessment only when required by an audit or other special event, and 26% leave the timing to each group in IT. About 20% assess roughly once a year, 22% several times a year, and 13% continuously or every time the program changes.
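The breakdown above can be checked with a few lines of arithmetic. This is only an illustrative sketch: the percentages come from the survey text, but the category labels and the split into "regular" versus "ad hoc" cadences are our own assumptions, not groupings used in the report.

```python
# Assessment-frequency shares quoted in the post (percent of organizations).
# The figures are reported as approximate, so treat the totals as rounded.
assessment_frequency = {
    "only when required by an audit or special event": 19,
    "decided by each group in IT": 26,
    "about once a year": 20,
    "several times a year": 22,
    "continuously or on every program change": 13,
}

# The five categories should account for all respondents.
total = sum(assessment_frequency.values())

# Hypothetical grouping (an assumption, not from the report): categories that
# imply a regular, scheduled cadence versus ad hoc assessment.
regular_categories = (
    "about once a year",
    "several times a year",
    "continuously or on every program change",
)
regular_share = sum(assessment_frequency[name] for name in regular_categories)
ad_hoc_share = total - regular_share

print(f"total: {total}%")
print(f"regular cadence: {regular_share}%")
print(f"ad hoc: {ad_hoc_share}%")
```

Under that grouping, a little over half of organizations assess on a regular cadence, while the rest assess only when an event or an individual IT group prompts it.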

What Frameworks and Standards Do Organizations Use?

Most organizations benchmark their security programs against some type of framework or standard.

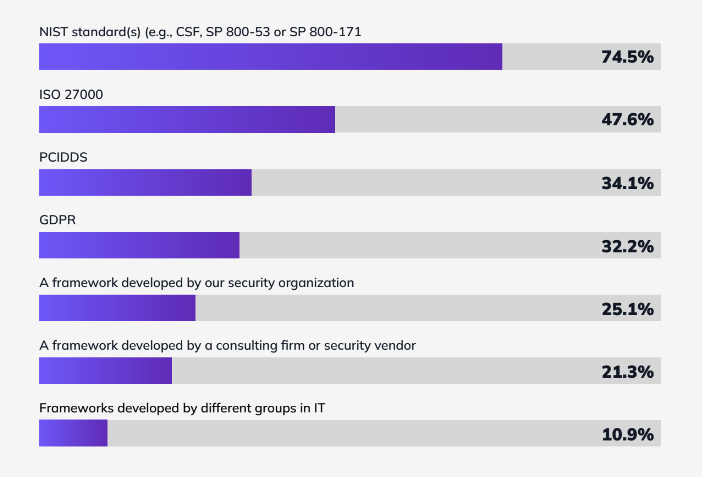

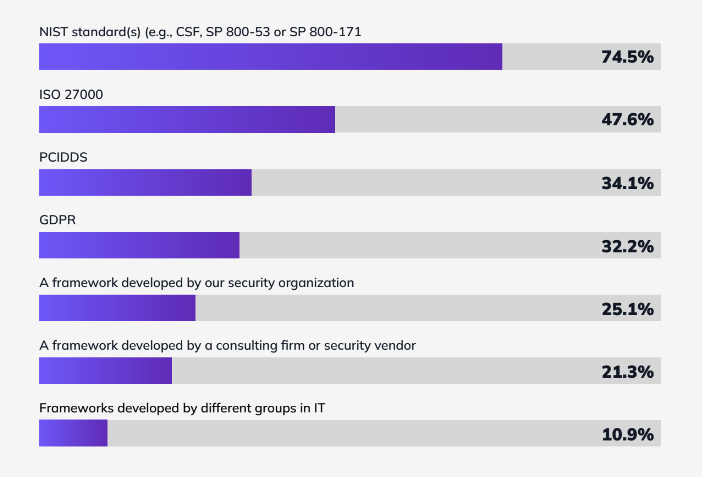

The most widely used, by far, are standards from NIST, adopted by three out of four organizations, and ISO 27000, adopted by nearly half (48%). These were followed by PCI DSS and the EU GDPR (34% and 32%, respectively). The adoption of these standards is not surprising, given that compliance with one or more of them is required to sell to U.S. federal agencies and defense contractors, handle credit card data, and do business in Europe.

What about frameworks created specifically to assess and manage security programs? These were used by substantial numbers of organizations, although fewer than the compliance-oriented standards. Twenty-five percent of security teams used frameworks created by the organization’s security group, 21% frameworks developed by consulting firms or security vendors, and 11% frameworks developed by different groups within IT.

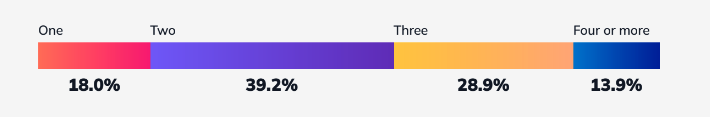

Because these figures add up to much more than 100%, it is clear that most organizations are using multiple frameworks and standards. In fact, most are using two (39%) or three (29%).

How Often Do Security Leaders Meet with Their Board of Directors – And How Much Time Do They Spend Preparing?

Good news: Security leaders have an unprecedented degree of visibility and influence in boardrooms, because security is now considered an integral part of business strategy.

This trend is supported by our survey data on how often security leaders meet with their boards of directors. Today, a large majority meet either quarterly (37%) or monthly (40%). Only a small fraction meet with their board just once a year (6%) or “rarely or never” (0.4%).

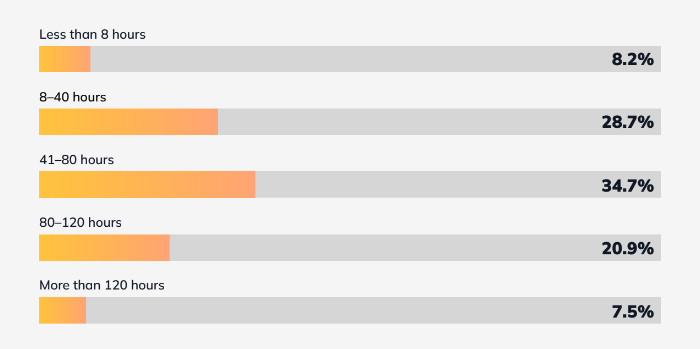

Not such good news: Security teams are subject to much more intense scrutiny, so they need to devote a lot of time to compiling data about security maturity and effectiveness and preparing presentations. The median time that survey respondents or their colleagues spend on these activities is between one and two weeks (41-80 hours) for each meeting. Moreover, 21% of respondents report that their teams spend two to three weeks (80-120 hours) preparing for each meeting, and 8% report more than 120 hours.
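To put the per-meeting figure in perspective, it can be scaled to a full year. The sketch below is a rough illustration only: it assumes the median 41-80 hour band applies equally to quarterly and monthly meetings, which the survey does not state, and the function and variable names are hypothetical.

```python
# Median preparation time per board meeting quoted in the post (hours).
MEDIAN_PREP_HOURS = (41, 80)

# Meetings per year for the two most common cadences in the survey.
MEETINGS_PER_YEAR = {"quarterly": 4, "monthly": 12}


def annual_prep_hours(cadence):
    """Return the (low, high) yearly preparation hours for a meeting cadence.

    Assumption: the median per-meeting band is the same for every cadence.
    """
    meetings = MEETINGS_PER_YEAR[cadence]
    low, high = MEDIAN_PREP_HOURS
    return meetings * low, meetings * high


for cadence in MEETINGS_PER_YEAR:
    low, high = annual_prep_hours(cadence)
    print(f"{cadence}: {low}-{high} hours per year")
```

Even at the quarterly cadence, this assumption implies several working weeks of preparation per year, and considerably more for teams that present monthly.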

How Do Organizations Use Peer Benchmarking Data?

A full 92% of the survey respondents reported that their organizations use peer data to benchmark the performance of their security programs.

What exactly do they use it for?

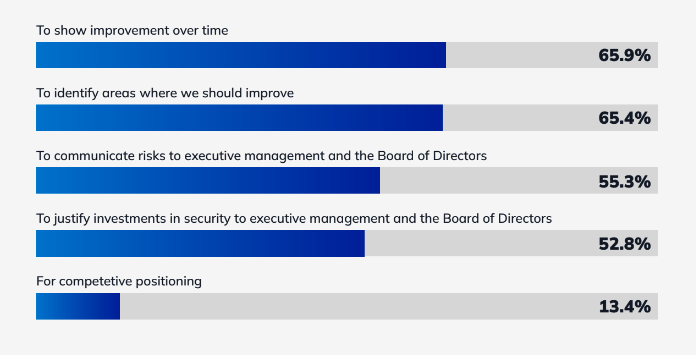

- Roughly two-thirds use it to show the improvement of their program over time (66%) and to identify areas for improvement (65%).

- Over half use peer benchmarking data to communicate risks to their management (55%) and to justify investments in security (53%).

- A smaller group (13%) use the data to help with competitive positioning.

Don’t forget to check back for the next two posts in this series, which will cover priorities for future improvements in security program management and the survey’s conclusions and recommendations. For more data and analysis on security program management practices and priorities, download the full report at: Security Program Management: Priorities and Strategies.